LiDAR technology has finally entered the mobile tech space, and most people have not even noticed. Cell phone makers have taken note of the growing need for accurate depth mapping and have already started building this technology into mobile devices.

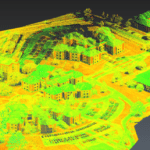

As a refresher, LiDAR stands for light detection and ranging. The technology uses a laser to measure distances and map objects.

A LiDAR sensor emits light pulses toward an object and captures the reflected light to create accurate 2D and 3D maps and to determine the distance and size of the object.

With Apple’s inclusion of the LiDAR scanner in the iPhone 12 Pro, this technology has become a part of everyday life.

In the past, LiDAR systems were expensive and bulky, but the sensors have become small and affordable enough to fit inside a phone.

One of the main uses for LiDAR in phone cameras is to build a 3D model of the scene in front of the lens.

For now, Apple’s Pro iPhones (from the iPhone 12 Pro onward) and the iPad Pro are the only phones and tablets with LiDAR sensors. Samsung previously shipped similar depth sensors in certain phones, but it has since abandoned them because they saw little use.

LiDAR is expected to become commonplace in smartphones and tablets in the next few years, because most mobile device manufacturers tend to follow Apple’s lead. But for now, Apple has the field to itself.

Currently, LiDAR sensors in smartphones are mostly used for taking better photos by adding depth scanning techniques. However, there are lots of other uses of this technology.

For example, LiDAR generates a three-dimensional map of objects by timing many reflections of the light pulses its sensor sends out. This is particularly useful in Augmented Reality applications, which are gaining traction every day.

Apple iPhone 12 Pro, iPhone 13 Pro, and Apple iPad Pro

Apple’s Pro devices are currently the only phones and tablets equipped with LiDAR sensors. When you turn the phone face down, you will notice a black circle at the bottom of the camera module.

This black circle is Apple’s very own LiDAR sensor. It is used to enhance the quality of photos, and it opens up new possibilities for AR on the phone.

Apple has been using LiDAR tech in cell phones since 2020, with the launch of the iPhone 12 Pro. However, this feature is only available in Apple’s high-end Pro models. The standard iPhone 13 and the iPhone 13 Mini do not have the sensor.

LiDAR works on the same principles in iPhones as it does in large-scale commercial systems. The sensor emits pulses of light and measures the time each pulse takes to bounce off an object and return to the phone.
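
As a rough illustration of that timing principle, the sketch below converts a measured round-trip time into a distance. It is a minimal Python example, not Apple’s implementation: the function names are hypothetical, and the only physics assumed is that the pulse travels at the speed of light.

```python
# Speed of light in a vacuum, in meters per second (close enough for air).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance (meters) for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time (seconds) a pulse needs for a distance."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S
```

For an object 5 meters away, the round trip takes roughly 33 nanoseconds, which gives a sense of how fast the timing electronics in such a sensor need to be.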

The data it collects is then translated into a three-dimensional point cloud, which the phone processes to determine the depth of each object.
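
One common way to turn per-pixel depth readings into a point cloud is the pinhole camera model. The sketch below assumes a depth map in meters and hypothetical camera intrinsics (`fx`, `fy`, `cx`, `cy`); none of these values come from Apple, and the actual processing on the phone may differ.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_point_cloud(
    depth_m: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth map (meters) into 3D points in the camera frame.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    All four are assumed, illustrative intrinsics.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth_m):      # v = pixel row
        for u, z in enumerate(row):        # u = pixel column
            if z <= 0:
                continue  # treat zero or negative depth as "no return"
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```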

In order to detect depth, these cameras must identify matching points in the reflected pattern. The light pulses emitted by the sensor are infrared, so they are invisible to human eyes. However, you can clearly see them with a night vision camera.

Here is a list of the Apple phones and tablets that include a LiDAR sensor.

- iPhone 14 Pro Max

- iPhone 14 Pro

- iPhone 13 Pro Max

- iPhone 13 Pro

- iPhone 12 Pro Max

- iPhone 12 Pro

- iPad Pro (2020 and later)

Apple uses LiDAR differently from most other applications, such as autonomous vehicles and drones. Instead of focusing on large-scale 3D mapping, Apple uses this technology to enhance the capabilities of its phone cameras.

One example of a cool way to use LiDAR in your Apple iPhone is to measure items and people. There’s a great article from CNET that outlines exactly how to do this.

While Apple’s LiDAR works on the same principles as the systems used for mapping, it has certain limitations. The range of the LiDAR sensor in Apple devices is only around 5 meters, which isn’t enough for accurate 3D mapping of a large room or area.

The LiDAR on iPhones is more commonly used for personal applications like augmented reality and camera focus. (Face ID relies on the separate front-facing TrueDepth camera, not the rear LiDAR scanner.) There is more to LiDAR than meets the eye, and this technology will continue to advance.

Apple’s LiDAR scanner on the Pro iPhones and the iPad Pro helps improve photo quality, especially for pictures taken in the dark.

This is because the LiDAR system works just as well in low light, so the phone knows where the objects in the picture actually are relative to one another.

The same principle that improves your pictures also improves video quality.

This technology can be used to tell the phone’s camera how far away an object is in order to get the camera to focus properly. It’s used in conjunction with night mode to deliver extremely high-quality pictures and videos, especially in the dark.
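
To see why a distance reading helps with focusing, consider the idealized thin-lens equation, 1/f = 1/d_o + 1/d_i, where f is the focal length, d_o the distance to the subject, and d_i the lens-to-sensor distance that brings the subject into focus. The sketch below is a textbook model only, not how any particular phone drives its lens; the function name is hypothetical.

```python
def image_distance_mm(focal_length_mm: float, subject_distance_mm: float) -> float:
    """Return the lens-to-sensor distance that focuses a subject (thin lens).

    Solves 1/f = 1/d_o + 1/d_i for d_i. Idealized model; real phone
    lenses are multi-element and behave only approximately like this.
    """
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    if subject_distance_mm <= focal_length_mm:
        raise ValueError("subject must be farther away than the focal length")
    return 1.0 / (1.0 / focal_length_mm - 1.0 / subject_distance_mm)
```

In this model, a known subject distance maps directly to a lens position, so the camera would not need to hunt back and forth for sharpness in a dark scene.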

LiDAR enables developers to create more realistic AR games and applications for the Apple platform.

LiDAR can be used to create a much more accurate representation of the room that someone is in than earlier approaches could. Apple has incorporated LiDAR on iPhones and iPads as a part of its AR platform, ARKit.

Do Android Phones Have LiDAR?

There are currently no Android phones in production with LiDAR technology. A few Android devices did have LiDAR-style depth sensors, but they were discontinued because of the high cost of the hardware in smartphones and the lack of response from users.

Samsung used to include rear-facing depth sensors in some of its phones, such as the Galaxy S10 5G and models in the Galaxy S20 line, and it also produced the ISOCELL Vizion 33D time-of-flight sensor. This feature was meant to improve camera focus and support augmented reality applications, the same way that LiDAR does in Apple devices.

Android devices like those in the Samsung Galaxy S20 line used a “Time-of-Flight” camera that used light to scan objects and measure distance. It was a simpler take on the same idea, and not as high-quality as the LiDAR scanner Apple uses.

With the launch of the Galaxy S21, Samsung dropped these sensors from its phones, and it has left them out of every smartphone since.

Samsung was hoping the sensor would boost its market share in the smartphone space, but a lack of compelling applications kept it from gaining wider adoption.

The Galaxy S22 also does not come with the sensor, which proved to be an expensive option for Samsung. Samsung believes that the 3D ToF sensor did not offer enough to the end consumer, and ultimately decided that the technology was not worth the extra investment.

Since Samsung abandoned the technology, no other Android smartphone maker has stepped forward to introduce it. Apple is currently the only smartphone manufacturer to use LiDAR, and the sensor carries over to the iPhone 14 Pro models.

However, the steadily growing demand for Augmented Reality applications is also increasing the demand for LiDAR. Because of this, it’s possible that Android smartphones will soon be using LiDAR again.

What is LiDAR Used For in Cell Phones?

Even though LiDAR technology was first developed for commercial applications back in the 1990s, where it was used to gather large data sets from a wide variety of sources, today we can even find the technology in our cell phones.

LiDAR back then wasn’t as advanced as what you’ll find in Apple cell phones today; it has since evolved to meet consumer demands. LiDAR has lots of uses in the smartphone industry.

The introduction of LiDAR sensors in Apple devices has helped improve the photo and video quality of iPhone cameras, especially at night.

This is because the LiDAR helps tell the camera which objects to focus on, something the camera finds more difficult in low-light conditions. LiDAR is also used in smartphones for AR apps.

Augmented Reality

With the growing popularity of augmented reality in cell phones, the world of LiDAR is growing alongside it. Augmented reality works by overlaying digital information onto the real world.

Users can experience augmented reality by wearing a headset, but smartphones are making the technology even more accessible for everyday use. A smartphone makes it easier to experience many of the same benefits that an AR headset offers.

Apple uses a LiDAR sensor in the iPhone to enhance its augmented reality capabilities. The company has long been an innovator in this technology, including in the creation of new augmented reality apps.

While a decent traditional LiDAR sensor is generally on the larger side, the one that Apple uses is very small and fits in the small space around the camera.

Apple opted to put an operational LiDAR sensor inside the camera module found in the iPhone 12 Pro. This decision greatly improves the AR capabilities of the mobile device.

The LiDAR sensor in iPhone Pro models and the iPad Pro lets users run AR apps much more smoothly and build better maps of the room in which to place virtual objects.

Apple Arcade is quickly expanding its library of AR games, thanks in part to the development of the Apple LiDAR system.

With the continual improvement of LiDAR and AR in Apple products, you can expect a lot more AR apps to soon be launched on the iOS platform.

The LiDAR sensor in the iPhone 12 Pro’s improved camera could change the way you use the camera. With more accurate object placement and occlusion (hiding virtual objects behind real ones), LiDAR will allow for new creative forms of AR apps.
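
Occlusion comes down to a per-pixel depth comparison: a virtual object should only be drawn where it is closer to the camera than the real surface the sensor measured. The sketch below illustrates that idea with two small depth maps; it is a simplified assumption of how such a test could look, not ARKit’s actual implementation, and the function name is hypothetical.

```python
from typing import List


def occlusion_mask(
    real_depth_m: List[List[float]],
    virtual_depth_m: List[List[float]],
) -> List[List[bool]]:
    """Return True for each pixel where the virtual object should be drawn.

    A virtual pixel is visible only if it is closer to the camera than
    the real surface measured at the same pixel.
    """
    mask: List[List[bool]] = []
    for real_row, virtual_row in zip(real_depth_m, virtual_depth_m):
        mask.append([v < r for r, v in zip(real_row, virtual_row)])
    return mask
```

For example, a virtual chair placed 2 meters away would be hidden wherever a real table stands 1 meter from the camera, and drawn wherever the nearest real surface is a wall 3 meters away.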

The LiDAR sensor in the iPhone is an incredibly powerful tool for creating accurate environment maps and 3D wireframes of rooms.

The speed and accuracy that LiDAR can deliver will allow developers to produce even more realistic augmented reality applications faster, and it opens up a wide range of possible uses.

Some even guess that Apple devices will replace AR headsets, since they offer many of the same benefits. LiDAR could also pave the way for Apple’s VR/AR headset, which you could use with the phone to enhance the AR capabilities of various apps.

Measurements

Another use of LiDAR sensors in cell phones is saving time and effort by letting people take measurements wherever they are.

The iPhone can now be turned into a fairly precise measuring tape, and the camera’s LiDAR scanner is capable of taking measurements of various objects from up to five meters away.

The LiDAR system collects depth information from its surroundings, and the phone processes it with artificial intelligence. Cell phones that are equipped with LiDAR will be able to recognize objects of interest with much higher accuracy than previous models.

Furthermore, LiDAR will make it possible for cell phones to calculate distances between objects with a much smaller margin of error.
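
Once two points have been located in 3D, the distance between them is just the straight-line (Euclidean) distance. The sketch below assumes the points are already expressed in meters in a shared coordinate frame; the function name and coordinates are illustrative, not taken from any Apple API.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def distance_between(a: Point3D, b: Point3D) -> float:
    """Return the straight-line distance in meters between two 3D points."""
    return math.dist(a, b)
```

Two points at `(0, 0, 1)` and `(3, 4, 1)` are 5 meters apart, for instance. Any error in the measured depth carries straight through to the result, which is why a more precise depth sensor gives a smaller margin of error.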

Apple introduced LiDAR scanning to its mobile phone lineup in 2020 with the launch of the iPhone 12 Pro. The addition of the LiDAR sensor allows the iPhone to quickly determine the distance to an object, and the distance between objects, by sending pulsed light waves into its environment.

The iPhone’s LiDAR sensor is used in the “Measure” app to measure the height of a person. It’s also useful when measuring objects around the home.

Even though the Measure app can be used on nearly all iPhone models running iOS 12 and later, the app works best with the latest iPhones that have LiDAR sensors.

To measure the size of an object, you just need to open the Measure app and position the phone so that the object is fully visible on the screen.

Once the phone recognizes an object of interest, a line will appear from top to bottom and show its height. You can also take a picture of the object with the measurement for later reference.

Taking Better Photos in the Dark

Even though cell phones, in general, have gotten better at taking pictures in low light conditions, a phone that utilizes a LiDAR system with its camera is leaps and bounds ahead.

Combined with conventional phase-detection and contrast autofocus, LiDAR lets the camera recognize objects and distances much more precisely than phones without it.

This is because a standard iPhone, like the iPhone 12, relies on its cameras alone to detect depth, whereas the iPhone 12 Pro pairs a normal camera with a LiDAR sensor.

You can clearly see the difference in the pictures with and without LiDAR, as these sensors significantly improve the quality of photos.

The LiDAR senses where the objects in the picture are, and helps the camera focus on the ones that should be in focus.

Compared to smartphones that don’t use LiDAR, Apple’s “Pro” iPhone models are able to capture more light and take a crisper picture. This feature allows iPhones to take better photos in dark situations.

Besides improving the camera’s sensitivity, the new LiDAR sensor helps the iPhone’s exposure performance. Night portraits pose a big exposure problem.

Since the foreground is brighter than the background, reducing the exposure would leave the image gray and fuzzy. The LiDAR sensor overcomes this problem by using depth to tell the foreground and background apart.

Apart from taking better photos in the dark, the LiDAR sensor in cell phones brings 3D scanning capabilities to our phones as well. LiDAR has become a part of the cell phone market, and it will likely stick around for a while.

It serves some great purposes, like enhancing photo quality, running AR apps, and measuring the size of objects without the need for a measuring tape.